设置GPU 1 2 from tensorflow.config.experimental import list_physical_devices, set_memory_growthphysical_devices = list_physical_devices('GPU' )

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

1 set_memory_growth(physical_devices[0 ], True )

数据读取 数据集下载

1 2 import numpy as npimport tensorflow as tf

1 files = np.load('mnist.npz' )

1 2 3 4 x_train = files['x_train' ] x_test = files['x_test' ] y_train = files['y_train' ] y_test = files['y_test' ]

1 2 3 4 print (x_train.shape)print (x_test.shape)print (y_train.shape)print (y_test.shape)

(60000, 28, 28)

(10000, 28, 28)

(60000,)

(10000,)

1 2 3 4 x_train = np.expand_dims(x_train, -1 ) x_test = np.expand_dims(x_test, -1 ) print (x_train.shape)print (x_test.shape)

(60000, 28, 28, 1)

(10000, 28, 28, 1)

1 2 3 4 5 6 n = 3000 import matplotlib.pyplot as pltplt.title('y=' + str (y_train[n])) plt.imshow(x_train[n].reshape((28 , 28 )), cmap='gray' ) plt.show()

模型的搭建 1 2 3 4 5 from tensorflow import kerasfrom tensorflow.keras import layersfrom tensorflow.keras import lossesfrom tensorflow.keras import metricsfrom tensorflow.keras import optimizers

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 model = keras.Sequential(name='LeNet5' ) model.add(keras.layers.Conv2D(filters=6 , kernel_size=(5 , 5 ), strides=(1 , 1 ), input_shape=(28 , 28 , 1 ), activation='relu' )) model.add(keras.layers.MaxPool2D()) model.add(keras.layers.Conv2D(filters=16 , kernel_size=(5 , 5 ), strides=(1 , 1 ), activation='relu' )) model.add(keras.layers.MaxPool2D()) model.add(keras.layers.Flatten()) model.add(keras.layers.Dense(units=120 , activation='relu' )) model.add(keras.layers.Dense(units=84 , activation='relu' )) model.add(keras.layers.Dense(units=10 , activation='softmax' ))

Model: "LeNet5"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 24, 24, 6) 156

max_pooling2d (MaxPooling2D (None, 12, 12, 6) 0

)

conv2d_1 (Conv2D) (None, 8, 8, 16) 2416

max_pooling2d_1 (MaxPooling (None, 4, 4, 16) 0

2D)

flatten (Flatten) (None, 256) 0

dense (Dense) (None, 120) 30840

dense_1 (Dense) (None, 84) 10164

dense_2 (Dense) (None, 10) 850

=================================================================

Total params: 44,426

Trainable params: 44,426

Non-trainable params: 0

_________________________________________________________________

模型的编译 1 model.compile (optimizer='adam' , loss=losses.sparse_categorical_crossentropy, metrics=metrics.sparse_categorical_accuracy)

模型的训练 1 model.fit(x_train, y_train, epochs=20 , batch_size=64 , validation_split=0.2 )

Epoch 1/20

750/750 [==============================] - 7s 5ms/step - loss: 0.7774 - sparse_categorical_accuracy: 0.8722 - val_loss: 0.1533 - val_sparse_categorical_accuracy: 0.9541

Epoch 2/20

750/750 [==============================] - 3s 4ms/step - loss: 0.1345 - sparse_categorical_accuracy: 0.9605 - val_loss: 0.1094 - val_sparse_categorical_accuracy: 0.9671

Epoch 3/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0900 - sparse_categorical_accuracy: 0.9730 - val_loss: 0.0919 - val_sparse_categorical_accuracy: 0.9734

Epoch 4/20

750/750 [==============================] - 4s 5ms/step - loss: 0.0693 - sparse_categorical_accuracy: 0.9791 - val_loss: 0.0940 - val_sparse_categorical_accuracy: 0.9712

Epoch 5/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0582 - sparse_categorical_accuracy: 0.9814 - val_loss: 0.0920 - val_sparse_categorical_accuracy: 0.9747

Epoch 6/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0501 - sparse_categorical_accuracy: 0.9844 - val_loss: 0.0806 - val_sparse_categorical_accuracy: 0.9769

Epoch 7/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0465 - sparse_categorical_accuracy: 0.9863 - val_loss: 0.0805 - val_sparse_categorical_accuracy: 0.9756

Epoch 8/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0390 - sparse_categorical_accuracy: 0.9874 - val_loss: 0.0713 - val_sparse_categorical_accuracy: 0.9808

Epoch 9/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0382 - sparse_categorical_accuracy: 0.9879 - val_loss: 0.0710 - val_sparse_categorical_accuracy: 0.9803

Epoch 10/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0333 - sparse_categorical_accuracy: 0.9893 - val_loss: 0.0630 - val_sparse_categorical_accuracy: 0.9842

Epoch 11/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0324 - sparse_categorical_accuracy: 0.9896 - val_loss: 0.0648 - val_sparse_categorical_accuracy: 0.9828

Epoch 12/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0280 - sparse_categorical_accuracy: 0.9909 - val_loss: 0.0733 - val_sparse_categorical_accuracy: 0.9826

Epoch 13/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0251 - sparse_categorical_accuracy: 0.9923 - val_loss: 0.0714 - val_sparse_categorical_accuracy: 0.9827

Epoch 14/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0276 - sparse_categorical_accuracy: 0.9913 - val_loss: 0.0641 - val_sparse_categorical_accuracy: 0.9847

Epoch 15/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0228 - sparse_categorical_accuracy: 0.9932 - val_loss: 0.0857 - val_sparse_categorical_accuracy: 0.9820

Epoch 16/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0190 - sparse_categorical_accuracy: 0.9941 - val_loss: 0.0786 - val_sparse_categorical_accuracy: 0.9829

Epoch 17/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0203 - sparse_categorical_accuracy: 0.9940 - val_loss: 0.0730 - val_sparse_categorical_accuracy: 0.9834

Epoch 18/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0206 - sparse_categorical_accuracy: 0.9937 - val_loss: 0.0772 - val_sparse_categorical_accuracy: 0.9848

Epoch 19/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0201 - sparse_categorical_accuracy: 0.9941 - val_loss: 0.0881 - val_sparse_categorical_accuracy: 0.9836

Epoch 20/20

750/750 [==============================] - 3s 4ms/step - loss: 0.0182 - sparse_categorical_accuracy: 0.9942 - val_loss: 0.0915 - val_sparse_categorical_accuracy: 0.9830

<keras.callbacks.History at 0x1f66d29fa00>

模型的验证 1 model.evaluate(x_test, y_test)

313/313 [==============================] - 1s 3ms/step - loss: 0.0656 - sparse_categorical_accuracy: 0.9858

[0.06561200320720673, 0.98580002784729]

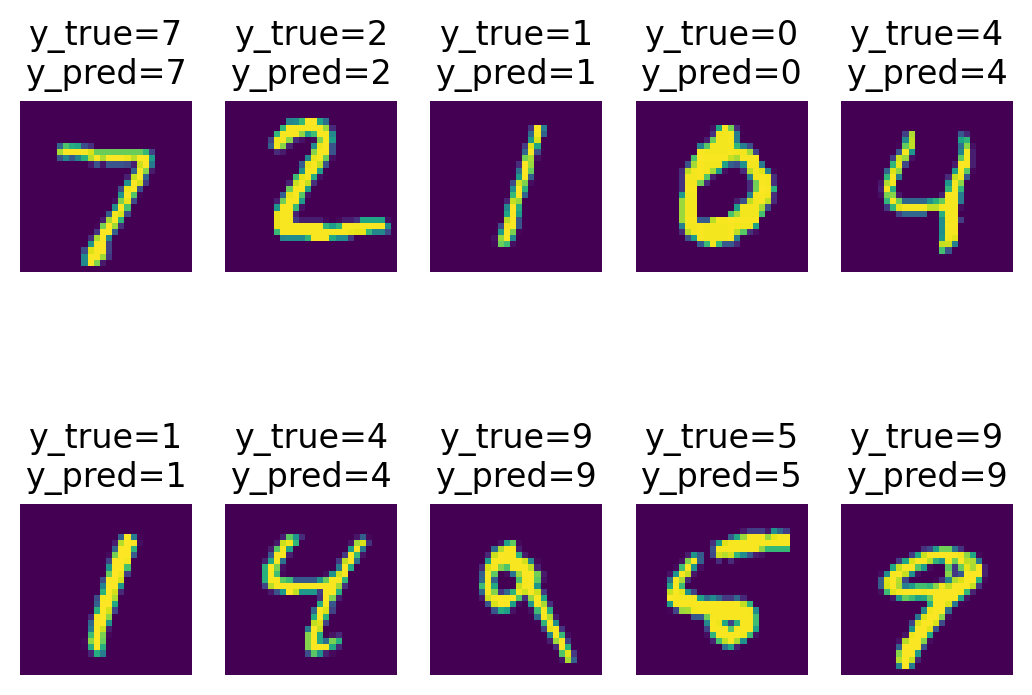

模型的测试 1 2 3 y_pred = model.predict(x_test[:10 ]).argmax(axis=1 ) print (y_pred)print (y_test[:10 ])

1/1 [==============================] - 0s 18ms/step

[7 2 1 0 4 1 4 9 5 9]

[7 2 1 0 4 1 4 9 5 9]

1 2 3 4 5 6 7 plt.figure(dpi=200 ) for i in range (len (x_test[:10 ])): ax = plt.subplot(2 ,5 ,i+1 ) ax.axis('off' ) ax.set_title(f'y_true={y_test[i]} \ny_pred={y_pred[i]} ' ) ax.imshow(x_test[i].reshape((28 , 28 ))) plt.show()