Hadoop cluster plan

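|      | hadoop102          | hadoop103                    | hadoop104                   |
|------|--------------------|------------------------------|-----------------------------|
| HDFS | NameNode, DataNode | DataNode                     | SecondaryNameNode, DataNode |
| YARN | NodeManager        | ResourceManager, NodeManager | NodeManager                 |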

This plan is the same as the one described earlier.
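One way to check a running cluster against this plan is to compare each node's `jps` output with the daemons the table assigns to that node. The sketch below uses hypothetical helper names (`expected_daemons`, `missing_daemons`) and reads the `jps` output from stdin, so it can be tried without a cluster.

```bash
#!/bin/bash
# Hypothetical helper: daemons each node should run according to the cluster plan.
expected_daemons() {
  case "$1" in
    hadoop102) echo "NameNode DataNode NodeManager" ;;
    hadoop103) echo "DataNode ResourceManager NodeManager" ;;
    hadoop104) echo "SecondaryNameNode DataNode NodeManager" ;;
    *) return 1 ;;
  esac
}

# Print every expected daemon that is missing from the jps output read on stdin.
# jps prints "<pid> <MainClass>" per line, so the class name is field 2.
missing_daemons() {
  local host=$1 running daemon expected
  expected=$(expected_daemons "$host") || { echo "unknown host: $host" >&2; return 2; }
  running=$(awk '{print $2}')
  for daemon in $expected; do
    grep -qxF "$daemon" <<< "$running" || echo "$daemon"
  done
}
```

For example, `docker exec -u minglog hadoop103 jps | missing_daemons hadoop103` prints nothing when hadoop103 matches the plan. This assumes the Hadoop daemons run as minglog (jps only lists the calling user's JVMs) and that `jps` is on the PATH set by the image's `ENV` lines.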

The IP configuration is as follows:

Host machine network:

IP:192.168.128.66

GATEWAY:192.168.128.2

DNS1:192.168.128.2

Cluster network:

hadoop102:192.168.128.102

hadoop103:192.168.128.103

hadoop104:192.168.128.104
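In this plan each container's last IP octet equals the numeric suffix of its host name (hadoop102 uses .102). A small sketch that derives the `/etc/hosts` lines from that convention, i.e. the same mappings that root_run.sh writes and that the `--add-host` flags pass to the containers; `hosts_entries` and `SUBNET_PREFIX` are hypothetical names.

```bash
#!/bin/bash
# Hypothetical helper: print /etc/hosts lines for the plan above.
# Assumes the convention used here: hadoopNNN lives at 192.168.128.NNN.
SUBNET_PREFIX=192.168.128

hosts_entries() {
  local host
  echo "${SUBNET_PREFIX}.66 docker"   # the host machine itself
  for host in "$@"; do
    echo "${SUBNET_PREFIX}.${host#hadoop} ${host}"
  done
}

hosts_entries hadoop102 hadoop103 hadoop104
```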

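Basic Hadoop cluster

Because installing a Hadoop cluster involves many steps, the installation here is split into stages: six images and three containers in total.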

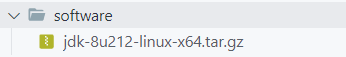
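The images depend on each other: Java8 (centos_java8:1.0), SSH (centos_ssh:1.0) and Base (hadoop_base:1.0) form a chain, and the three node images are all built `FROM hadoop_base:1.0`. A sketch for checking which of the six tags are still missing after a build; `missing_images` is a hypothetical helper that reads `repository:tag` lines from stdin.

```bash
#!/bin/bash
# The six image tags used in this article, listed in build order.
CLUSTER_IMAGES=(centos_java8:1.0 centos_ssh:1.0 hadoop_base:1.0
                hadoop102:1.0 hadoop103:1.0 hadoop104:1.0)

# Hypothetical helper: print the cluster images absent from the
# "repository:tag" lines read on stdin, in build order.
missing_images() {
  local present image
  present=$(cat)
  for image in "${CLUSTER_IMAGES[@]}"; do
    grep -qxF "$image" <<< "$present" || echo "$image"
  done
}
```

Usage: `docker image ls --format '{{.Repository}}:{{.Tag}}' | missing_images`; empty output means all six images exist.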

Image configuration

Java8 image

The Java8 image creates the user and sets up the Java 8 environment.

```dockerfile
FROM centos:7.7.1908
LABEL minglog="luoming@tipdm.com"

# Create minglog, set the minglog and root passwords, and prepare /opt
RUN useradd minglog \
    && echo 'minglog:123456' | chpasswd \
    && echo 'root:123456' | chpasswd \
    && mkdir -p /opt/module && mkdir -p /opt/software \
    && chmod -R 777 /opt

# Unpack the JDK as minglog, then delete the archive
USER minglog
COPY --chown=minglog:minglog software/jdk-*.tar.gz /opt/software
RUN tar -zxvf /opt/software/jdk-*.tar.gz -C /opt/module/
RUN rm -rf /opt/software/*
ENV JAVA_HOME=/opt/module/jdk1.8.0_212
ENV PATH=$JAVA_HOME/bin:$PATH
USER root
```

SSH image

The SSH image installs the SSH service and some tools used in later steps.

```dockerfile
FROM centos_java8:1.0
LABEL minglog="luoming@tipdm.com"

# Install sshd and tools, keep a single RSA host key, give minglog passwordless sudo
RUN yum install -y epel-release \
    && yum clean all && yum makecache \
    && yum install -y openssh-server openssh-clients net-tools vim sshpass \
    && sed -i '/^HostKey/d' /etc/ssh/sshd_config \
    && echo 'HostKey /etc/ssh/ssh_host_rsa_key' >> /etc/ssh/sshd_config \
    && ssh-keygen -t rsa -b 2048 -N '' -f /etc/ssh/ssh_host_rsa_key \
    && echo "minglog ALL=(ALL) NOPASSWD:ALL" >> /etc/sudoers

EXPOSE 22

# Start sshd whenever a login shell reads /etc/profile.d
RUN echo '#!/bin/bash' >> /etc/profile.d/run.sh
RUN echo 'nohup /usr/sbin/sshd -D > /dev/null 2> /dev/null &' >> /etc/profile.d/run.sh
RUN chmod +x /etc/profile.d/run.sh
```

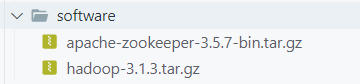
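The `sed -i '/^HostKey/d'` and `echo ... >>` pair in the SSH image leaves sshd with exactly one active host key, the RSA key generated right after it; commented `#HostKey` lines are untouched because they do not start with `HostKey`. The sketch replays the two edits on a throwaway file whose sample lines are made up for illustration; `apply_hostkey_edit` is a hypothetical name.

```bash
#!/bin/bash
# Replays the SSH image's sshd_config edit on a given file (GNU sed -i).
apply_hostkey_edit() {
  local conf=$1
  sed -i '/^HostKey/d' "$conf"                          # drop every active HostKey line
  echo 'HostKey /etc/ssh/ssh_host_rsa_key' >> "$conf"   # keep only the RSA key
}

sample=$(mktemp)
cat > "$sample" <<'EOF'
#Port 22
HostKey /etc/ssh/ssh_host_rsa_key
HostKey /etc/ssh/ssh_host_ecdsa_key
HostKey /etc/ssh/ssh_host_ed25519_key
EOF

apply_hostkey_edit "$sample"
grep '^HostKey' "$sample"
# HostKey /etc/ssh/ssh_host_rsa_key
rm -f "$sample"
```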

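Base image

The Base image installs the services shared by all Hadoop nodes (such as Hadoop and ZooKeeper), adds the commonly used shell scripts, and applies the related configuration files.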

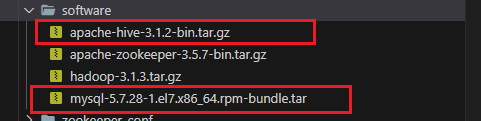

software folder: holds the installation archives that are needed, as shown below:

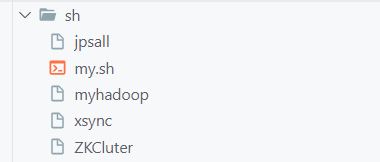

sh folder: holds all the commonly used shell scripts, as shown below:

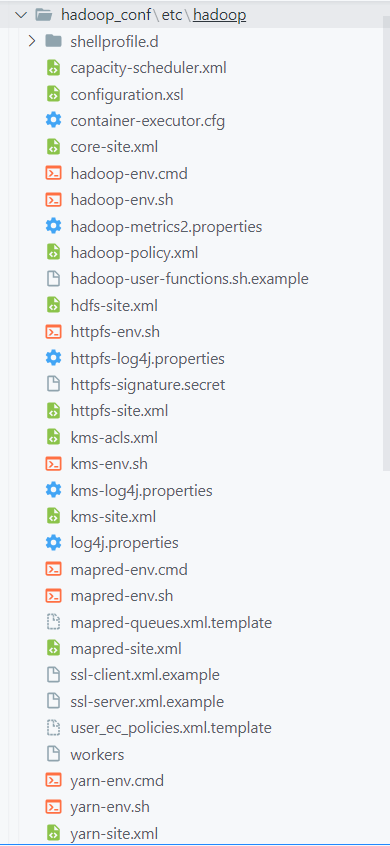

hadoop_conf folder: the basic Hadoop configuration files.

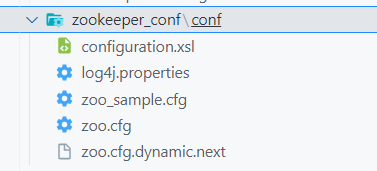

zookeeper_conf folder: the ZooKeeper configuration files.

Dockerfile

```dockerfile
FROM centos_ssh:1.0
LABEL minglog="luoming@tipdm.com"

ARG hadoop=/opt/module/hadoop-3.1.3
ARG zookeeper=/opt/module/zookeeper-3.5.7

# Tools shared by all nodes
RUN yum install -y epel-release net-tools vim
RUN yum install -y psmisc nc rsync lrzsz ntp libzstd openssl-static tree iotop git cronie sshpass

# Unpack Hadoop and ZooKeeper as minglog
USER minglog
COPY --chown=minglog:minglog software/hadoop-3.1.3.tar.gz /opt/software
RUN tar -zxvf /opt/software/hadoop-3.1.3.tar.gz -C /opt/module/ \
    && rm -rf /opt/software/*
COPY --chown=minglog:minglog software/apache-zookeeper-3.5.7-bin.tar.gz /opt/software
RUN tar -zxvf /opt/software/apache-zookeeper-3.5.7-bin.tar.gz -C /opt/module/ \
    && mv /opt/module/apache-zookeeper-3.5.7-bin ${zookeeper} \
    && rm -rf /opt/software/*

# Shell scripts and configuration files
RUN mkdir /home/minglog/bin/
COPY --chown=minglog:minglog sh/* /home/minglog/bin/
RUN chmod 777 -R /home/minglog/bin
COPY --chown=minglog:minglog hadoop_conf/ ${hadoop}/
COPY --chown=minglog:minglog zookeeper_conf/ ${zookeeper}/

EXPOSE 22-65535
USER root
COPY sh/my.sh /etc/profile.d/
```

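Hadoop102 image

The Hadoop102 image generates SSH keys for root and minglog, configures NTP so that hadoop102 serves time to the other nodes, and sets the ZooKeeper myid.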

```dockerfile
FROM hadoop_base:1.0
LABEL minglog="luoming@tipdm.com"

# SSH keys for root and minglog
RUN mkdir /root/.ssh \
    && ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q
USER minglog
RUN mkdir /home/minglog/.ssh \
    && ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q

# NTP server: allow the 192.168.128.0/24 subnet, serve the local clock
USER root
RUN echo "restrict 192.168.128.0 mask 255.255.255.0 nomodify notrap" >> /etc/ntp.conf \
    && sed -i '/^server/d' /etc/ntp.conf \
    && echo "server 127.127.1.0" >> /etc/ntp.conf \
    && echo "fudge 127.127.1.0 stratum 10" >> /etc/ntp.conf \
    && echo "SYNC_HWCLOCK=yes" >> /etc/sysconfig/ntpd

# ZooKeeper myid for hadoop102
USER minglog
RUN mkdir /opt/module/zookeeper-3.5.7/zkData \
    && echo "2" >> "/opt/module/zookeeper-3.5.7/zkData/myid"
USER root
```
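The NTP part of this image turns ntpd on hadoop102 into a local time source: the `restrict ... nomodify notrap` line lets hosts in 192.168.128.0/24 query it without changing its configuration, the upstream `server` lines are deleted, and `server 127.127.1.0` with `fudge 127.127.1.0 stratum 10` makes ntpd serve its own local clock. The sketch replays those edits on a sample file (contents made up for illustration; the `SYNC_HWCLOCK` line goes to a different file and is left out); `configure_ntp_master` is a hypothetical name.

```bash
#!/bin/bash
# Replays the hadoop102 ntp.conf edits on a given file (GNU sed -i).
configure_ntp_master() {
  local conf=$1
  echo "restrict 192.168.128.0 mask 255.255.255.0 nomodify notrap" >> "$conf"
  sed -i '/^server/d' "$conf"                 # remove upstream servers
  echo "server 127.127.1.0" >> "$conf"        # local clock driver
  echo "fudge 127.127.1.0 stratum 10" >> "$conf"
}

sample=$(mktemp)
printf '%s\n' 'driftfile /var/lib/ntp/drift' \
  'server 0.centos.pool.ntp.org iburst' \
  'server 1.centos.pool.ntp.org iburst' > "$sample"
configure_ntp_master "$sample"
cat "$sample"
# driftfile /var/lib/ntp/drift
# restrict 192.168.128.0 mask 255.255.255.0 nomodify notrap
# server 127.127.1.0
# fudge 127.127.1.0 stratum 10
rm -f "$sample"
```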

Hadoop103 image

The Hadoop103 image synchronizes its clock with hadoop102 (through an ntpdate cron job) and sets the ZooKeeper myid.

```dockerfile
FROM hadoop_base:1.0
LABEL minglog="luoming@tipdm.com"

# minglog SSH key and ZooKeeper myid for hadoop103
USER minglog
RUN mkdir /home/minglog/.ssh \
    && ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q \
    && mkdir /opt/module/zookeeper-3.5.7/zkData \
    && echo "3" >> "/opt/module/zookeeper-3.5.7/zkData/myid"

# root SSH key; sync time from hadoop102 every minute
USER root
RUN mkdir /root/.ssh \
    && ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q \
    && mkdir -p /var/spool/cron \
    && echo "*/1 * * * * /usr/sbin/ntpdate hadoop102" >> /var/spool/cron/root
```

Hadoop104 image

The Hadoop104 image synchronizes its clock with hadoop102 (through an ntpdate cron job) and sets the ZooKeeper myid.

```dockerfile
FROM hadoop_base:1.0
LABEL minglog="luoming@tipdm.com"

# minglog SSH key and ZooKeeper myid for hadoop104
USER minglog
RUN mkdir /home/minglog/.ssh \
    && ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q \
    && mkdir /opt/module/zookeeper-3.5.7/zkData \
    && echo "4" >> "/opt/module/zookeeper-3.5.7/zkData/myid"

# root SSH key; sync time from hadoop102 every minute
USER root
RUN mkdir /root/.ssh \
    && ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q \
    && mkdir -p /var/spool/cron \
    && echo "*/1 * * * * /usr/sbin/ntpdate hadoop102" >> /var/spool/cron/root
```
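The three node images write myid 2, 3 and 4, i.e. the host name's numeric suffix minus 100. ZooKeeper requires each node's myid to equal the N of that node's `server.N=` entry in zoo.cfg. The zookeeper_conf files are not shown here, so the `server.N` lines printed below, with the customary 2888 (peer) and 3888 (election) ports, are an assumption about what they contain; `myid_for_host` and `zoo_cfg_servers` are hypothetical helpers.

```bash
#!/bin/bash
# Hypothetical helper: ZooKeeper myid for hadoopNNN, following this
# article's choice of 2/3/4 for hadoop102/103/104.
myid_for_host() {
  local suffix=${1#hadoop}
  [[ $suffix =~ ^[0-9]+$ ]] || return 1
  echo $((10#$suffix - 100))
}

# Matching zoo.cfg server lines (ports assumed, see above).
zoo_cfg_servers() {
  local host
  for host in "$@"; do
    echo "server.$(myid_for_host "$host")=${host}:2888:3888"
  done
}

zoo_cfg_servers hadoop102 hadoop103 hadoop104
# server.2=hadoop102:2888:3888
# server.3=hadoop103:2888:3888
# server.4=hadoop104:2888:3888
```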

Batch installation scripts

root_run.sh

This script creates the minglog user on the host machine, configures the hosts file, and installs Docker and related tools.

On a freshly installed CentOS system, simply run the script as root.

```bash
#!/bin/bash
# Run as root on a freshly installed CentOS 7 host.

# Create minglog and give it passwordless sudo
useradd minglog && echo 'minglog:123456' | chpasswd
echo "minglog ALL=(ALL) NOPASSWD:ALL" >> /etc/sudoers

# Name resolution for the host machine and the three containers
echo "192.168.128.66 docker" >> /etc/hosts
echo "192.168.128.102 hadoop102" >> /etc/hosts
echo "192.168.128.103 hadoop103" >> /etc/hosts
echo "192.168.128.104 hadoop104" >> /etc/hosts

echo "Downloading tools..."
yum -y install epel-release && yum makecache
yum -y install net-tools vim network zip unzip yum-utils device-mapper-persistent-data lvm2 wget bridge-utils sshpass cronie
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

echo "Installing Docker..."
yum -y install docker-ce docker-ce-cli containerd.io
usermod -aG docker minglog
systemctl restart docker && systemctl enable docker && systemctl --no-pager status docker
echo "Docker installation complete"
```
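root_run.sh appends to /etc/hosts and /etc/sudoers unconditionally, so running it twice leaves duplicate lines. If re-runs are expected, a guard like the one below appends a line only when that exact line is not already present. This is a sketch; `append_once` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: append LINE to FILE unless FILE already contains it verbatim.
append_once() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Example (as root):
#   append_once "192.168.128.102 hadoop102" /etc/hosts
#   append_once "minglog ALL=(ALL) NOPASSWD:ALL" /etc/sudoers
```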

One-click setup of the Hadoop container cluster

Run it as minglog from the directory that contains dockerHadoop and Hadoop_Docker.zip.

```bash
#!/bin/bash
# Install the dockerHadoop management script
mkdir -p /home/minglog/bin && cp dockerHadoop /home/minglog/bin/ && sudo chmod +x /home/minglog/bin/dockerHadoop

mkdir -p ~/Docker && mv Hadoop_Docker.zip ~/Docker/Hadoop_Docker.zip && cd ~/Docker || exit 1
echo "Unpacking all Docker build files"
unzip Hadoop_Docker.zip && rm -rf Hadoop_Docker.zip

echo "######### Building images #########"
read -p "Remove all existing containers and images first (recommended, avoids conflicts)? [y/n](n): " is_del
if [ "${is_del}" == 'y' ] || [ "${is_del}" == 'Y' ]; then
    echo ""
    echo "Removing all containers...."
    if [ -n "$(docker ps -aq)" ]; then
        docker rm -f $(docker ps -aq)
    fi
    echo ""
    echo "Removing all images...."
    if [ -n "$(docker images -aq)" ]; then
        docker rmi -f $(docker images -aq)
    fi
    echo "All containers and images removed!"
fi

echo "## Building the Java8 image ##"
cd Java8 && docker build -t centos_java8:1.0 . && cd ..
echo "## Java8 image built ##"
echo "## Building the SSH image ##"
cd SSH && docker build -t centos_ssh:1.0 . && cd ..
echo "## SSH image built ##"
echo "## Building the hadoop_base image ##"
cd Base && docker build -t hadoop_base:1.0 . && cd ..
echo "## hadoop_base image built ##"
for host in hadoop102 hadoop103 hadoop104; do
    echo "## Building the $host image ##"
    cd $host && docker build -t $host:1.0 . && cd ..
    echo "## $host image built ##"
done
echo "######### All images built #########"

echo "######### Creating containers #########"
for host in hadoop102 hadoop103 hadoop104; do
    echo "## Creating container $host ##"
    docker run -d -it \
        --name $host \
        --privileged=true \
        --add-host=docker:192.168.128.66 \
        --add-host=hadoop102:192.168.128.102 \
        --add-host=hadoop103:192.168.128.103 \
        --add-host=hadoop104:192.168.128.104 \
        --hostname $host $host:1.0 /usr/sbin/init
    echo "## Container $host created ##"
done
echo "######### Containers created #########"

echo "######### Setting up the network #########"
sudo wget https://gitee.com/mirrors/Pipework/raw/master/pipework -P /usr/bin && sudo chmod 777 /usr/bin/pipework
# Move the host IP from ens33 onto bridge br0 so the containers can join the LAN
sudo brctl addbr br0
sudo ip link set dev br0 up
sudo ip addr del 192.168.128.66/24 dev ens33
sudo ip addr add 192.168.128.66/24 dev br0
sudo brctl addif br0 ens33
sudo ip route add default via 192.168.128.2 dev br0
echo "## Bridge br0 created ##"
echo "## Attaching containers to the bridge ##"
sudo pipework br0 hadoop102 192.168.128.102/24@192.168.128.2
sudo pipework br0 hadoop103 192.168.128.103/24@192.168.128.2
sudo pipework br0 hadoop104 192.168.128.104/24@192.168.128.2
echo "## All containers attached to the bridge ##"

# Passwordless SSH: host -> containers, then between all containers
sudo rm -rf /home/minglog/.ssh/known_hosts /root/.ssh/known_hosts
if [ ! -e /home/minglog/.ssh/id_rsa ]; then
    sudo ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q
    ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q
fi
for host in hadoop102 hadoop103 hadoop104; do
    sudo sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no root@$host
    sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no minglog@$host
done
for host in hadoop102 hadoop103 hadoop104; do
    for host2 in hadoop102 hadoop103 hadoop104; do
        ssh $host "sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no minglog@$host2"
        sudo ssh root@$host "sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no root@$host2"
    done
done

if [ $(docker ps -aq | wc -l) -eq 3 ]; then
    read -p "Containers installed. Remove all build files? [y/n](n): " is_del
    if [ "${is_del}" == 'y' ] || [ "${is_del}" == 'Y' ]; then
        echo ""
        echo "Removing all build files...."
        sudo rm -rf /home/minglog/Docker/*
        echo "Build files removed...."
    else
        echo "Build files kept..."
    fi
    clear
    echo "*******************************************"
    echo "*   Congratulations, setup succeeded!     *"
    echo "*******************************************"
    echo ""
else
    echo "**** Environment error, please reinstall! ****"
fi
```
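Both prompts in this script treat only `y` or `Y` as yes and anything else, including just pressing Enter, as the default `n`. A small hypothetical helper, `confirm`, captures that rule in one place; it reads the answer from stdin, so it also works non-interactively.

```bash
#!/bin/bash
# Hypothetical helper: yes/no prompt with default "n".
# Returns 0 only for y or Y, 1 for anything else (including EOF).
confirm() {
  local answer
  read -r -p "$1 [y/n](n): " answer
  case "$answer" in
    y|Y) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage inside the setup script:
#   if confirm "Remove all existing containers and images?"; then ...; fi
```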

dockerHadoop management script

Usage: `dockerHadoop start|stop|restart`.

```bash
#!/bin/bash
# dockerHadoop: start, stop or restart the Hadoop cluster containers.

setup_network() {
    echo "######### Setting up the network #########"
    if [ ! -x /usr/bin/pipework ]; then
        sudo wget https://gitee.com/mirrors/Pipework/raw/master/pipework -P /usr/bin && sudo chmod 777 /usr/bin/pipework
    fi
    # Recreate br0 and move the host IP onto it; errors for steps already done are harmless
    sudo brctl addbr br0
    sudo ip link set dev br0 up
    sudo ip addr del 192.168.128.66/24 dev ens33
    sudo ip addr add 192.168.128.66/24 dev br0
    sudo brctl addif br0 ens33
    sudo ip route add default via 192.168.128.2 dev br0
    echo "## Bridge br0 created ##"
    # pipework interfaces disappear when a container stops, so they are re-attached on every start
    echo "## Attaching containers to the bridge ##"
    sudo pipework br0 hadoop102 192.168.128.102/24@192.168.128.2
    sudo pipework br0 hadoop103 192.168.128.103/24@192.168.128.2
    sudo pipework br0 hadoop104 192.168.128.104/24@192.168.128.2
    echo "## All containers attached to the bridge ##"
}

setup_ssh() {
    if [ ! -e /home/minglog/.ssh/id_rsa ]; then
        sudo ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q
        ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q
    fi
    for host in hadoop102 hadoop103 hadoop104; do
        sudo sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no root@$host
        sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no minglog@$host
    done
    for host in hadoop102 hadoop103 hadoop104; do
        for host2 in hadoop102 hadoop103 hadoop104; do
            ssh $host "sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no minglog@$host2"
            sudo ssh root@$host "sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no root@$host2"
        done
    done
}

start_cluster() {
    echo "============= Starting Hadoop cluster containers ============="
    echo "Starting all containers"
    docker start $(docker ps -aq)
    setup_network
    # Restart the NTP server on hadoop102, the time source for the other nodes
    sudo ssh hadoop102 "systemctl restart ntpd && systemctl status ntpd"
    setup_ssh
    if [ $(docker ps -q | wc -l) -eq 3 ]; then
        clear
        echo "============= Cluster containers started! ============="
    fi
}

stop_cluster() {
    echo "============= Stopping Hadoop cluster containers ============="
    docker stop $(docker ps -aq)
    clear
    echo "============= Hadoop cluster containers stopped ============="
}

case $1 in
"start")
    start_cluster
    ;;
"stop")
    stop_cluster
    ;;
"restart")
    # A plain docker restart would drop the pipework interfaces, so stop and start again
    stop_cluster
    start_cluster
    ;;
*)
    echo "Invalid argument, please use one of: start, stop, restart."
    exit 1
    ;;
esac
```
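dockerHadoop passes `$(docker ps -aq)` to docker, so it acts on every container on the host, not only the three cluster nodes. If other containers share the host, naming the nodes explicitly avoids touching them. A minimal sketch; `cluster_cmd` and the `DRY_RUN` switch are hypothetical, and for `start` the network and SSH steps in dockerHadoop are still needed afterwards.

```bash
#!/bin/bash
CLUSTER_NODES=(hadoop102 hadoop103 hadoop104)

# Hypothetical helper: run (or, with DRY_RUN=1, only print) a docker
# lifecycle command for the cluster containers only.
cluster_cmd() {
  case "$1" in
    start|stop|restart) ;;
    *) echo "usage: cluster_cmd start|stop|restart" >&2; return 1 ;;
  esac
  local cmd=(docker "$1" "${CLUSTER_NODES[@]}")
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `DRY_RUN=1 cluster_cmd stop` prints `docker stop hadoop102 hadoop103 hadoop104` without running it.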

Hadoop cluster with Hive

This version adds MySQL and Hive on top of the basic Hadoop environment above.

Base image

The main change is to the Base image's Dockerfile:

```dockerfile
FROM centos_ssh:1.0
LABEL minglog="luoming@tipdm.com"

ARG hadoop=/opt/module/hadoop-3.1.3
ARG zookeeper=/opt/module/zookeeper-3.5.7
ARG hive=/opt/module/hive-3.1.2

# Tools shared by all nodes (libaio and numactl are needed by MySQL)
RUN yum install -y epel-release net-tools vim
RUN yum install -y psmisc nc rsync lrzsz ntp libzstd openssl-static tree iotop git cronie sshpass libaio sudo numactl

# Unpack Hadoop, ZooKeeper and Hive as minglog
USER minglog
COPY --chown=minglog:minglog software/hadoop-3.1.3.tar.gz /opt/software
RUN tar -zxvf /opt/software/hadoop-3.1.3.tar.gz -C /opt/module/
COPY --chown=minglog:minglog software/apache-zookeeper-3.5.7-bin.tar.gz /opt/software
RUN tar -zxvf /opt/software/apache-zookeeper-3.5.7-bin.tar.gz -C /opt/module/ \
    && mv /opt/module/apache-zookeeper-3.5.7-bin ${zookeeper}
COPY --chown=minglog:minglog software/apache-hive-3.1.2-bin.tar.gz /opt/software
RUN tar -zxvf /opt/software/apache-hive-3.1.2-bin.tar.gz -C /opt/module/ \
    && mv /opt/module/apache-hive-3.1.2-bin ${hive}

# Install MySQL 5.7 from the RPM bundle as root, then clear /opt/software
RUN mkdir -p /opt/software/mysql_jars
COPY --chown=minglog:minglog software/mysql-5.7.28-1.el7.x86_64.rpm-bundle.tar /opt/software
USER root
RUN tar -xf /opt/software/mysql-5.7.28-1.el7.x86_64.rpm-bundle.tar -C /opt/software/mysql_jars \
    && cd /opt/software/mysql_jars \
    && rpm -ivh mysql-community-common-5.7.28-1.el7.x86_64.rpm \
    && rpm -ivh mysql-community-libs-5.7.28-1.el7.x86_64.rpm \
    && rpm -ivh mysql-community-libs-compat-5.7.28-1.el7.x86_64.rpm \
    && rpm -ivh mysql-community-client-5.7.28-1.el7.x86_64.rpm \
    && rpm -ivh mysql-community-server-5.7.28-1.el7.x86_64.rpm \
    && rm -rf /opt/software/*

# Shell scripts and configuration files
USER minglog
RUN mkdir /home/minglog/bin/
COPY --chown=minglog:minglog sh/* /home/minglog/bin/
RUN chmod 777 -R /home/minglog/bin
COPY --chown=minglog:minglog hadoop_conf/ ${hadoop}/
COPY --chown=minglog:minglog zookeeper_conf/ ${zookeeper}/
COPY --chown=minglog:minglog mysql_conf/ /etc/
COPY --chown=minglog:minglog hive_conf/ ${hive}/

EXPOSE 22-65535
USER root
COPY sh/my.sh /etc/profile.d/
```

The MySQL and Hive installation packages also need to be added to the software folder.

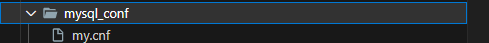

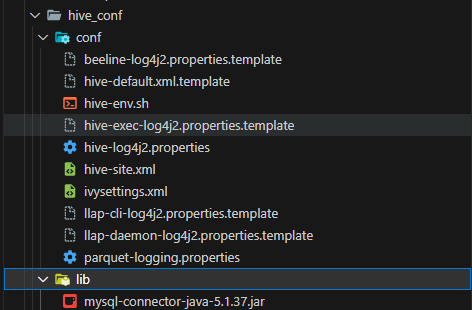

The MySQL and Hive configuration files (the mysql_conf and hive_conf folders) also need to be added.

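In addition, MySQL must be initialized and its password changed before it can be used, so we first write a mysqlInit script that initializes MySQL and changes the root password to 123456.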

mysqlInit

```bash
#!/bin/bash
mysql_passwd=123456

echo "Initializing the MySQL database..."
sudo rm -rf /var/lib/mysql/* \
    && sudo mysqld --initialize --user=mysql \
    && sudo systemctl start mysqld
echo "Database initialized; resetting the initial password in 2 seconds."
sleep 2

# The temporary root password is the 11th field of the newest "root@" line in mysqld.log.
# Replace it first with a password that satisfies the default policy.
tmp_passwd=$(sudo cat /var/log/mysqld.log | grep root@ | tail -n 1 | awk '{print $11}')
sudo mysqladmin -uroot -p"$tmp_passwd" password Minglog123456++
# Relax the password policy so the short password is accepted
sudo mysql -uroot -pMinglog123456++ -e "set global validate_password_policy=LOW;"
sudo mysql -uroot -pMinglog123456++ -e "set global validate_password_length=6;"
sudo mysql -uroot -pMinglog123456++ -e "set password = password('$mysql_passwd');"
# Allow root to connect from any host and create the Hive metastore database
sudo mysql -uroot -p$mysql_passwd -e "update mysql.user set host='%' where user='root';"
sudo mysql -uroot -p$mysql_passwd -e "flush privileges;"
sudo mysql -uroot -p$mysql_passwd -e "create database metastore;"
echo "$(hostname): MySQL initialized, password changed to $mysql_passwd."
```
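mysqlInit locates the temporary root password by field position: the 11th whitespace-separated field of the `root@` line in /var/log/mysqld.log. That matches the MySQL 5.7 message `A temporary password is generated for root@localhost: <password>`, but breaks if the line layout differs. An alternative that does not count fields takes everything after `root@localhost: ` on the last matching line; `temp_password` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: print the most recent temporary root password in a mysqld log.
temp_password() {
  local log=${1:-/var/log/mysqld.log}
  grep 'temporary password is generated for root@localhost: ' "$log" \
    | tail -n 1 \
    | sed 's/.*root@localhost: //'
}

# Example (as root, since the log is not world-readable):
#   mysqladmin -uroot -p"$(temp_password)" password 'Minglog123456++'
```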

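Hive also has to be initialized before it can be used, so the Hive2 script used earlier is changed as follows to detect automatically whether initialization is still needed.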

```bash
#!/bin/bash
HIVE_LOG_DIR=$HIVE_HOME/logs
if [ ! -d $HIVE_LOG_DIR ]; then
    mkdir -p $HIVE_LOG_DIR
fi

# Check a process. $1: process name, $2: listening port.
# Prints the PID(s) matched by name; succeeds only if the PID on the port is among them.
function check_process()
{
    pid=$(ps -ef 2>/dev/null | grep -v grep | grep -i $1 | awk '{print $2}')
    ppid=$(netstat -nltp 2>/dev/null | grep $2 | awk '{print $7}' | cut -d '/' -f 1)
    echo $pid
    [[ "$pid" =~ "$ppid" ]] && [ "$ppid" ] && return 0 || return 1
}

# Initialize the metastore schema in MySQL on first start
# (a missing hive.log is taken as the sign that Hive has never run).
function hive_init()
{
    if [ ! -e /opt/module/hive-3.1.2/logs/hive.log ]; then
        echo "Hive has not been initialized; initializing in 2 seconds..."
        sleep 2
        schematool -initSchema -dbType mysql -verbose
        echo "Hive metastore initialized; starting Hive services in 2 seconds..."
        sleep 2
    fi
}

function hive_start()
{
    metapid=$(check_process HiveMetaStore 9083)
    cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"
    # Give the metastore time to start and wait for HDFS to leave safe mode
    cmd=$cmd" sleep 4; hdfs dfsadmin -safemode wait >/dev/null 2>&1"
    if [ -z "$metapid" ]; then
        eval "$cmd"
        echo "Metastore service started"
    else
        echo "Metastore service is already running"
    fi
    server2pid=$(check_process HiveServer2 10000)
    cmd="nohup hive --service hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"
    if [ -z "$server2pid" ]; then
        eval "$cmd"
        echo "HiveServer2 service started"
    else
        echo "HiveServer2 service is already running"
    fi
}

function hive_stop()
{
    metapid=$(check_process HiveMetaStore 9083)
    [ "$metapid" ] && kill $metapid || echo "Metastore service is not running"
    server2pid=$(check_process HiveServer2 10000)
    [ "$server2pid" ] && kill $server2pid || echo "HiveServer2 service is not running"
}

case $1 in
"start")
    hive_init
    hive_start
    ;;
"stop")
    hive_stop
    ;;
"restart")
    hive_stop
    sleep 2
    hive_start
    ;;
"status")
    check_process HiveMetaStore 9083 >/dev/null && echo "Metastore is running normally" || echo "Metastore is not running properly"
    check_process HiveServer2 10000 >/dev/null && echo "HiveServer2 is running normally" || echo "HiveServer2 is not running properly"
    ;;
*)
    echo "Invalid Args!"
    echo "Usage: $(basename "$0") start|stop|restart|status"
    ;;
esac
```
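`check_process` treats a service as running only when two lookups agree: the PID(s) whose command line matches the name (`ps -ef`) and the PID listening on the port (column 7 of `netstat -nltp`, shaped like `1234/java`). Note that `[[ "$pid" =~ "$ppid" ]]` with a quoted right side is a substring test, so PID 12 would also "match" inside 123. The sketch below isolates both steps with exact matching; `listener_pid` and `same_process` are hypothetical helpers and the netstat text in the usage is made up.

```bash
#!/bin/bash
# Print the PID listening on PORT, from `netstat -nltp`-style text on stdin.
# Column 4 is the local address (e.g. ":::9083"), column 7 is "PID/program".
listener_pid() {
  awk -v port="$1" '$4 ~ (":" port "$") { split($7, a, "/"); print a[1]; exit }'
}

# Succeed when LISTENER is non-empty and equals one of the whitespace-separated PIDS.
same_process() {
  local pids=$1 listener=$2 p
  [ -n "$listener" ] || return 1
  for p in $pids; do
    [ "$p" = "$listener" ] && return 0
  done
  return 1
}
```

Usage on a node: `same_process "$(pgrep -f HiveMetaStore)" "$(netstat -nltp 2>/dev/null | listener_pid 9083)"`.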

Hadoop102 image

```dockerfile
FROM hadoop_base:1.0
LABEL minglog="luoming@tipdm.com"

RUN mkdir /root/.ssh \
    && ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q
USER minglog
RUN mkdir /home/minglog/.ssh \
    && ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q

USER root
RUN echo "restrict 192.168.128.0 mask 255.255.255.0 nomodify notrap" >> /etc/ntp.conf \
    && sed -i '/^server/d' /etc/ntp.conf \
    && echo "server 127.127.1.0" >> /etc/ntp.conf \
    && echo "fudge 127.127.1.0 stratum 10" >> /etc/ntp.conf \
    && echo "SYNC_HWCLOCK=yes" >> /etc/sysconfig/ntpd

USER minglog
RUN mkdir /opt/module/zookeeper-3.5.7/zkData \
    && echo "2" >> "/opt/module/zookeeper-3.5.7/zkData/myid"
USER root
```

Hadoop103 image

```dockerfile
FROM hadoop_base:1.0
LABEL minglog="luoming@tipdm.com"

USER minglog
RUN mkdir /home/minglog/.ssh \
    && ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q \
    && mkdir /opt/module/zookeeper-3.5.7/zkData \
    && echo "3" >> "/opt/module/zookeeper-3.5.7/zkData/myid"

# Sync time from hadoop102 every minute; at each login, point hive-site.xml at this host
USER root
RUN mkdir /root/.ssh \
    && ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q \
    && mkdir -p /var/spool/cron \
    && echo "*/1 * * * * /usr/sbin/ntpdate hadoop102" >> /var/spool/cron/root \
    && echo "sed -i 's/hadoop102/hadoop103/g' /opt/module/hive-3.1.2/conf/hive-site.xml" >> /etc/profile.d/my.sh
```

Hadoop104 image

```dockerfile
FROM hadoop_base:1.0
LABEL minglog="luoming@tipdm.com"

USER minglog
RUN mkdir /home/minglog/.ssh \
    && ssh-keygen -t rsa -N '' -f /home/minglog/.ssh/id_rsa -q \
    && mkdir /opt/module/zookeeper-3.5.7/zkData \
    && echo "4" >> "/opt/module/zookeeper-3.5.7/zkData/myid"

# Sync time from hadoop102 every minute; at each login, point hive-site.xml at this host
USER root
RUN mkdir /root/.ssh \
    && ssh-keygen -t rsa -N '' -f /root/.ssh/id_rsa -q \
    && mkdir -p /var/spool/cron \
    && echo "*/1 * * * * /usr/sbin/ntpdate hadoop102" >> /var/spool/cron/root \
    && echo "sed -i 's/hadoop102/hadoop104/g' /opt/module/hive-3.1.2/conf/hive-site.xml" >> /etc/profile.d/my.sh
```
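On hadoop103 and hadoop104, the line appended to /etc/profile.d/my.sh replaces every `hadoop102` in hive-site.xml with the local host name at each login, presumably so Hive on that node uses its own services (the hive_conf contents are not shown). The substitution is idempotent: after the first login there is no `hadoop102` left to replace. The sketch applies the same sed to a hypothetical one-property sample; `point_hive_at` is an illustrative name.

```bash
#!/bin/bash
# Same substitution as the hadoop103/hadoop104 login hook (GNU sed -i).
point_hive_at() {
  local host=$1 conf=$2
  sed -i "s/hadoop102/${host}/g" "$conf"
}

sample=$(mktemp)
cat > "$sample" <<'EOF'
<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://hadoop102:3306/metastore?useSSL=false</value>
</property>
EOF

point_hive_at hadoop104 "$sample"
point_hive_at hadoop104 "$sample"   # a second run changes nothing
grep -o 'jdbc:mysql://[^<]*' "$sample"
# jdbc:mysql://hadoop104:3306/metastore?useSSL=false
rm -f "$sample"
```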