Dataset Description

Data source: the THUCNews news text classification dataset from Tsinghua University.

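Training set: 180,000 samples

Validation set: 10,000 samples

Test set: 10,000 samples

Ten classes in total: finance, real estate, stocks, education, science, society, politics, sports, games, entertainment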

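Data files:

class.txt: class names

vocab.pkl: vocabulary

train.txt: training set

test.txt: test set

dev.txt: validation set

embedding_SougouNews.npz: pretrained embedding weights from Sogou

embedding_Tencent.npz: pretrained embedding weights from Tencent

Note: you only need one of these two pretrained embeddings.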

Loading the Data

```python
import numpy as np

# Sogou News pretrained embeddings: one 300-dim vector per vocabulary entry
embedding_weight_file = np.load('../data/embedding_SougouNews.npz')
embedding_weight = embedding_weight_file['embeddings']
embedding_weight.shape
```

```
(4762, 300)
```

```python
import pickle as pkl

# vocab maps each character to its integer ID
with open('../data/vocab.pkl', 'rb') as f:
    vocab = pkl.load(f)
print(f'Vocabulary size: {len(vocab)}')
```

```
Vocabulary size: 4762
```

```python
# The last five vocabulary entries: the special tokens come last
list(vocab.items())[-5:]
```

```
[('蚱', 4757), ('§', 4758), ('霎', 4759), ('<UNK>', 4760), ('<PAD>', 4761)]
```
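The vocabulary ships ready-made, and its last two entries show that `<UNK>` and `<PAD>` occupy the end of the ID range. The sketch below shows one way such a character vocabulary could be built. It counts character frequencies, keeps the most frequent characters, and appends the two special tokens last. The helpers `build_vocab` and `encode` and the `max_size` and `min_freq` thresholds are hypothetical and were not taken from `vocab.pkl`.

```python
from collections import Counter

UNK, PAD = '<UNK>', '<PAD>'


def build_vocab(sentences, max_size=10000, min_freq=1):
    """Hypothetical helper: map characters to IDs by descending frequency.

    max_size and min_freq are illustrative assumptions.
    """
    counter = Counter(ch for sentence in sentences for ch in sentence)
    # Keep characters seen at least min_freq times, most frequent first
    kept = [ch for ch, freq in counter.most_common(max_size) if freq >= min_freq]
    vocab = {ch: idx for idx, ch in enumerate(kept)}
    # Special tokens take the last two IDs, like the layout seen in vocab.pkl
    vocab[UNK] = len(vocab)
    vocab[PAD] = len(vocab)
    return vocab


def encode(sentence, vocab):
    """Map each character to its ID, falling back to <UNK>."""
    return [vocab.get(ch, vocab[UNK]) for ch in sentence]


# Toy corpus; named toy_vocab so it does not overwrite the real vocab
toy_vocab = build_vocab(['股票上涨', '股票下跌'])
print(encode('股市上涨', toy_vocab))  # [0, 6, 2, 3]: '市' is unseen, so it maps to <UNK>
```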

```python
with open('../data/class.txt', 'r', encoding='utf-8') as f:
    labels = f.readlines()
labels = np.array([line.strip() for line in labels])
labels
```

```
array(['finance', 'realty', 'stocks', 'education', 'science', 'society',
       'politics', 'sports', 'game', 'entertainment'], dtype='<U13')
```

```python
import pandas as pd

# Each line is "<text>\t<label>": column 0 holds the text, column 1 the label ID
train_data = pd.read_csv('../data/train.txt', sep='\t', header=None)
test_data = pd.read_csv('../data/test.txt', sep='\t', header=None)
val_data = pd.read_csv('../data/dev.txt', sep='\t', header=None)
print('Train:', train_data.shape)
print('Test:', test_data.shape)
print('Val:', val_data.shape)
```

```
Train: (180000, 2)
Test: (10000, 2)
Val: (10000, 2)
```

Text Preprocessing

Convert each sentence into a list of its characters:

```python
# Split each sentence into a list of individual characters
train_data[0] = train_data[0].apply(list)
test_data[0] = test_data[0].apply(list)
val_data[0] = val_data[0].apply(list)
train_data[0]
```

```
0         [中, 华, 女, 子, 学, 院, :, 本, 科, 层, 次, 仅, 1, 专, 业, ...
1         [两, 天, 价, 网, 站, 背, 后, 重, 重, 迷, 雾, :, 做, 个, 网, ...
2         [东, 5, 环, 海, 棠, 公, 社, 2, 3, 0, -, 2, 9, 0, 平, ...
3         [卡, 佩, 罗, :, 告, 诉, 你, 德, 国, 脚, 生, 猛, 的, 原, 因, ...
4         [8, 2, 岁, 老, 太, 为, 学, 生, 做, 饭, 扫, 地, 4, 4, 年, ...
                                ...
179995    [侧, 滑, 掌, 上, P, S, P, 手, 机, , 索, 爱, X, p, e, ...
179996    [皖, 通, 高, 速, 跌, 0, ., 8, %, , 国, 元, 香, 港, 给, ...
179997                       [《, 无, 限, 江, 湖, 》, 近, 日, 更, 新]
179998    [廊, 坊, 十, 九, 城, 邦, 别, 墅, 现, 内, 部, 认, 购, 9, 6, ...
179999    [连, 续, 上, 涨, 7, 个, 月, , 糖, 价, 7, 月, 可, 能, 出, ...
Name: 0, Length: 180000, dtype: object
```

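Pad every sentence to a fixed length. Sentences that are too long get truncated, and sentences that are too short get padded with `<PAD>`.

```python
def pad_sequence(word_list, pad_size=32, pad_word='<PAD>'):
    """Truncate or right-pad word_list to exactly pad_size tokens."""
    word_length = len(word_list)
    if word_length < pad_size:
        return word_list + [pad_word] * (pad_size - word_length)
    return word_list[:pad_size]
```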
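The post fixes `pad_size=32` without saying why. A common way to pick the length is to take a percentile of the sentence-length distribution, so that most sentences fit without truncation. The sketch below uses the nearest-rank method. The helper `length_percentile` and the 90% coverage target are assumptions for illustration.

```python
import math


def length_percentile(sentences, q=0.9):
    """Hypothetical helper: smallest length L such that a fraction >= q of sentences have len <= L."""
    lengths = sorted(len(s) for s in sentences)
    # Nearest-rank percentile: the ceil(q * n)-th smallest length (1-based rank)
    rank = max(1, math.ceil(q * len(lengths)))
    return lengths[rank - 1]


toy_sentences = ['a' * 10, 'b' * 20, 'c' * 30, 'd' * 40]  # made-up lengths 10, 20, 30, 40
print(length_percentile(toy_sentences, q=0.9))  # 40
print(length_percentile(toy_sentences, q=0.5))  # 20
```

On this dataset you would call it on the character lists, for example `length_percentile(train_data[0])`, before `pad_sequence` is applied.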

```python
train_data[0] = train_data[0].apply(pad_sequence)
test_data[0] = test_data[0].apply(pad_sequence)
val_data[0] = val_data[0].apply(pad_sequence)
train_data[0]
```

```
0         [中, 华, 女, 子, 学, 院, :, 本, 科, 层, 次, 仅, 1, 专, 业, ...
1         [两, 天, 价, 网, 站, 背, 后, 重, 重, 迷, 雾, :, 做, 个, 网, ...
2         [东, 5, 环, 海, 棠, 公, 社, 2, 3, 0, -, 2, 9, 0, 平, ...
3         [卡, 佩, 罗, :, 告, 诉, 你, 德, 国, 脚, 生, 猛, 的, 原, 因, ...
4         [8, 2, 岁, 老, 太, 为, 学, 生, 做, 饭, 扫, 地, 4, 4, 年, ...
                                ...
179995    [侧, 滑, 掌, 上, P, S, P, 手, 机, , 索, 爱, X, p, e, ...
179996    [皖, 通, 高, 速, 跌, 0, ., 8, %, , 国, 元, 香, 港, 给, ...
179997    [《, 无, 限, 江, 湖, 》, 近, 日, 更, 新, <PAD>, <PAD>, <...
179998    [廊, 坊, 十, 九, 城, 邦, 别, 墅, 现, 内, 部, 认, 购, 9, 6, ...
179999    [连, 续, 上, 涨, 7, 个, 月, , 糖, 价, 7, 月, 可, 能, 出, ...
Name: 0, Length: 180000, dtype: object
```

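Map each character to its ID in the vocabulary. Characters missing from the vocabulary map to `<UNK>`:

```python
UNK = '<UNK>'
UNK_idx = vocab.get(UNK)
```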

```python
def word2idx(word_list, vocab=vocab):
    # Characters not in the vocabulary fall back to the <UNK> ID
    return [vocab.get(word, UNK_idx) for word in word_list]
```

```python
train_data[0] = train_data[0].apply(word2idx)
test_data[0] = test_data[0].apply(word2idx)
val_data[0] = val_data[0].apply(word2idx)
train_data[0]
```

```
0         [14, 125, 55, 45, 35, 307, 4, 81, 161, 941, 25...
1         [135, 80, 33, 54, 505, 1032, 70, 95, 95, 681, ...
2         [152, 13, 469, 87, 2416, 36, 725, 3, 10, 1, 61...
3         [278, 1231, 344, 4, 304, 762, 589, 252, 6, 120...
4         [24, 3, 348, 187, 518, 73, 35, 17, 486, 1464, ...
                                ...
179995    [1916, 1128, 1303, 23, 111, 118, 111, 79, 47, ...
179996    [3537, 148, 19, 648, 247, 1, 104, 24, 163, 0, ...
179997    [20, 178, 644, 395, 503, 21, 368, 29, 526, 15,...
179998    [2115, 1929, 382, 687, 149, 1394, 343, 541, 67...
179999    [347, 221, 23, 209, 41, 179, 38, 0, 1096, 33, ...
Name: 0, Length: 180000, dtype: object
```

Text preprocessing is now complete. Next, we build the data pipeline.

Building the Data Pipeline

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import Dataset, DataLoader
```

```python
class TextDataset(Dataset):
    def __init__(self, data):
        # Column 0: padded ID sequences (lists of length 32); column 1: label IDs
        self.x = torch.LongTensor(data[0].tolist())
        self.y = torch.LongTensor(data[1].tolist())

    def __getitem__(self, index):
        # Return a (token IDs, label) pair without storing state on the dataset
        return self.x[index], self.y[index]

    def __len__(self):
        return len(self.x)
```

```python
train_dataset = TextDataset(train_data)
test_dataset = TextDataset(test_data)
val_dataset = TextDataset(val_data)

# Inspect the first training sample: (token IDs, label)
train_dataset[0]
```

```
(tensor([  14,  125,   55,   45,   35,  307,    4,   81,  161,  941,  258,  494,
            2,  175,   48,  145,   97,   17, 4761, 4761, 4761, 4761, 4761, 4761,
         4761, 4761, 4761, 4761, 4761, 4761, 4761, 4761]),
 tensor(3))
```

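Model Construction

FastText Model

FastText averages the vectors of all the words in a short text and passes the average directly to a softmax layer. It also adds n-gram features as a trick to capture local word-order information. Other text classifiers include SVMs, logistic regression, and neural networks. Compared with them, FastText trains much faster while keeping comparable accuracy, and it supports multiple languages.

However, FastText is a bag-of-words method designed for English, where spaces separate the words. To apply it to Chinese text, you first have to segment the sentences into words and remove the punctuation. Then you convert them into the format the model expects.

This dataset is fairly large, and word segmentation plus n-gram feature computation would make the data size explode. We therefore skip both steps and feed the raw sentences to the model character by character. If your hardware allows it, segmenting the sentences into words and adding n-gram features is a way to get better results.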
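As a reference point, the n-gram trick can be sketched at the character level without any word segmentation. Each adjacent character pair is hashed into a fixed number of extra embedding buckets, so the table size does not grow with the number of distinct bigrams. Several details are assumptions for illustration: the helper `char_bigram_ids`, the bucket count, the CRC32 hash, and placing bucket IDs after the 4762 character IDs. The model in this post does not use these features.

```python
import zlib


def char_bigram_ids(chars, num_buckets=250000, offset=0):
    """Hypothetical helper: hash each adjacent character pair into [offset, offset + num_buckets).

    zlib.crc32 is used because Python's built-in hash() of str is randomized per process.
    """
    ids = []
    for a, b in zip(chars, chars[1:]):
        bucket = zlib.crc32((a + b).encode('utf-8')) % num_buckets
        ids.append(offset + bucket)
    return ids


chars = list('股票上涨')
# Bigram IDs start after the 4762 character IDs, so both could share one embedding table
bigram_ids = char_bigram_ids(chars, num_buckets=1000, offset=4762)
print(bigram_ids)  # three IDs, for the bigrams 股票, 票上, 上涨
```

Under this layout, you would append the bigram IDs to each sentence's character IDs before averaging. The embedding table would then need 4762 + `num_buckets` rows. Hash collisions are accepted as the price of a bounded table.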

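```python
class FastTextModel(nn.Module):
    def __init__(self, embedding_pretrained, output_dim):
        super().__init__()
        # Read the embedding size from the argument, not from the global embedding_weight
        embedding_dim = embedding_pretrained.shape[1]
        # Initialize from the pretrained vectors and keep fine-tuning them (freeze=False)
        self.embedding = nn.Embedding.from_pretrained(embedding_pretrained, freeze=False)
        self.fc = nn.Linear(embedding_dim, output_dim)

    def forward(self, x):
        x = self.embedding(x)  # (batch, seq_len) -> (batch, seq_len, embedding_dim)
        x = x.mean(dim=1)      # average over all positions, including <PAD>
        return self.fc(x)      # logits; the softmax is applied inside CrossEntropyLoss
```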

```python
embedding_pretrained = torch.Tensor(embedding_weight)
output_dim = 10  # ten news categories

fastText_model = FastTextModel(embedding_pretrained, output_dim)
fastText_model
```

```
FastTextModel(
  (embedding): Embedding(4762, 300)
  (fc): Linear(in_features=300, out_features=10, bias=True)
)
```

```python
# Smoke test: a batch of 16 random ID sequences of length 32
test_tensor = torch.randint(high=200, size=(16, 32))
fastText_model(test_tensor).shape
```

```
torch.Size([16, 10])
```
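Note that `x.mean(dim=1)` averages over all 32 positions, so the trainable `<PAD>` vector is mixed into the sentence vector of every short sentence. A masked mean that ignores padding is one possible refinement. It is not what the model above does. The pure-Python sketch below shows the arithmetic on toy 2-dimensional vectors, where ID 9 stands in for `<PAD>`. In PyTorch, the same idea is usually written as the sum of `embedding * mask` over the sequence, divided by `mask.sum(dim=1, keepdim=True)`.

```python
def masked_mean(token_ids, embeddings, pad_id):
    """Average the embedding vectors of non-padding tokens only."""
    vectors = [embeddings[t] for t in token_ids if t != pad_id]
    dim = len(next(iter(embeddings.values())))
    if not vectors:
        return [0.0] * dim  # all padding: fall back to a zero vector
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


toy_embeddings = {0: [1.0, 2.0], 1: [3.0, 4.0], 9: [0.0, 0.0]}  # ID 9 plays the role of <PAD>
ids = [0, 1, 9, 9]
print(masked_mean(ids, toy_embeddings, pad_id=9))  # [2.0, 3.0]

# Plain mean over every position, as x.mean(dim=1) computes it
plain = [sum(toy_embeddings[t][d] for t in ids) / len(ids) for d in range(2)]
print(plain)  # [1.0, 1.5], diluted by the two padding positions
```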

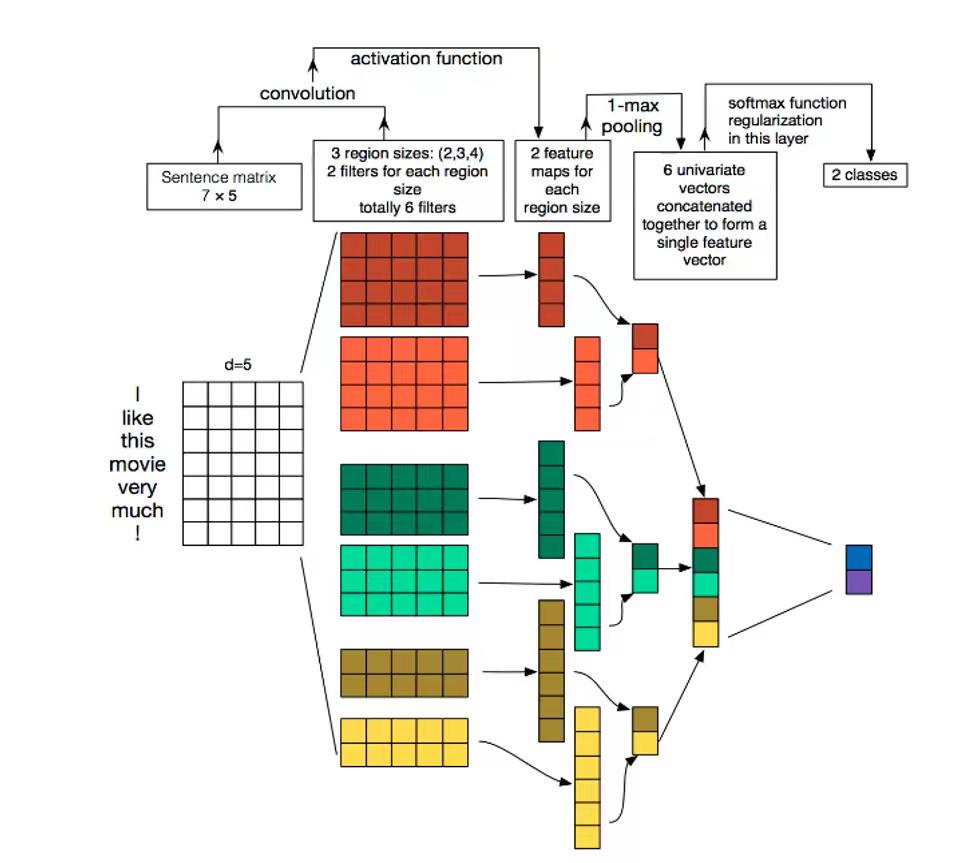

TextCNN Model

```python
class TextCNNModel(nn.Module):
    def __init__(self, embedding_pretrained, num_filters, filter_sizes, output_dim, dropout):
        super().__init__()
        embedding_dim = embedding_pretrained.shape[1]
        self.embedding = nn.Embedding.from_pretrained(embedding_pretrained, freeze=False)
        # One convolution per filter size; each kernel spans fs characters x the full embedding width
        self.convs = nn.ModuleList([
            nn.Conv2d(in_channels=1, out_channels=num_filters, kernel_size=(fs, embedding_dim))
            for fs in filter_sizes
        ])
        self.dropout = nn.Dropout(dropout)
        self.fc = nn.Linear(len(filter_sizes) * num_filters, output_dim)

    def forward(self, x):
        # (batch, seq_len) -> (batch, 1, seq_len, embedding_dim): one input channel
        x = self.embedding(x).unsqueeze(1)
        # Each conv output: (batch, num_filters, seq_len - fs + 1, 1) -> drop the last dim
        relus = [F.relu(conv(x)).squeeze(3) for conv in self.convs]
        # Max-over-time pooling: (batch, num_filters, L) -> (batch, num_filters)
        pooled = [F.max_pool1d(r, r.shape[2]).squeeze(2) for r in relus]
        x = torch.cat(pooled, dim=1)  # (batch, len(filter_sizes) * num_filters)
        x = self.dropout(x)
        return self.fc(x)
```

```python
embedding_pretrained = torch.Tensor(embedding_weight)
num_filters = 2          # feature maps per filter size
filter_sizes = [5, 6, 7] # convolution window lengths, in characters
output_dim = 10
dropout = 0.5

textCNN_model = TextCNNModel(embedding_pretrained, num_filters, filter_sizes, output_dim, dropout)
textCNN_model
```

```
TextCNNModel(
  (embedding): Embedding(4762, 300)
  (convs): ModuleList(
    (0): Conv2d(1, 2, kernel_size=(5, 300), stride=(1, 1))
    (1): Conv2d(1, 2, kernel_size=(6, 300), stride=(1, 1))
    (2): Conv2d(1, 2, kernel_size=(7, 300), stride=(1, 1))
  )
  (dropout): Dropout(p=0.5, inplace=False)
  (fc): Linear(in_features=6, out_features=10, bias=True)
)
```

```python
test_tensor = torch.randint(high=200, size=(16, 32))
textCNN_model(test_tensor).shape
```

```
torch.Size([16, 10])
```
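Each kernel spans the full embedding width, so `Conv2d` with `kernel_size=(fs, embedding_dim)` effectively acts as a 1D convolution along the sequence. A filter of size `fs` produces `32 - fs + 1` scores: 28, 27, and 26 for sizes 5, 6, and 7. Max-over-time pooling keeps the largest score from each filter, which gives `3 × 2 = 6` features for the `fc` layer. The pure-Python sketch below runs one filter over a toy sequence with one scalar per position. The sequence and kernel weights are made up for illustration.

```python
def conv1d_valid(seq, kernel, bias=0.0):
    """'Valid' 1D cross-correlation (what nn.Conv2d computes): no padding, stride 1."""
    k = len(kernel)
    return [sum(seq[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(seq) - k + 1)]


def relu(values):
    return [max(0.0, v) for v in values]


seq = [0.5, -1.0, 2.0, 0.0, 1.0, 3.0]  # made-up "embeddings", one scalar per position
kernel = [1.0, -1.0, 0.5]               # made-up filter of size fs = 3
scores = relu(conv1d_valid(seq, kernel))
print(scores)       # [2.5, 0.0, 2.5, 0.5]: 6 - 3 + 1 = 4 positions
print(max(scores))  # 2.5: max-over-time pooling keeps one number per filter

for fs in (5, 6, 7):
    print(fs, 32 - fs + 1)  # conv output length for seq_len = 32
```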

LSTM Model

```python
class LSTMModel(nn.Module):
    def __init__(self, embedding_pretrained, hidden_dim, output_dim):
        super().__init__()
        embedding_dim = embedding_pretrained.shape[1]
        self.embedding = nn.Embedding.from_pretrained(embedding_pretrained, freeze=False)
        # 2-layer bidirectional LSTM; batch_first=True means inputs are (batch, seq_len, features)
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, num_layers=2,
                            bidirectional=True, batch_first=True)
        # Forward and backward states are concatenated, hence hidden_dim * 2
        self.fc = nn.Linear(hidden_dim * 2, output_dim)

    def forward(self, x):
        x = self.embedding(x)
        x, _ = self.lstm(x)  # (batch, seq_len, hidden_dim * 2)
        # Classify from the output at the last time step (a <PAD> position for short sentences)
        return self.fc(x[:, -1, :])
```

```python
embedding_pretrained = torch.Tensor(embedding_weight)
hidden_dim = 128
output_dim = 10

lstm_model = LSTMModel(embedding_pretrained, hidden_dim, output_dim)
lstm_model
```

```
LSTMModel(
  (embedding): Embedding(4762, 300)
  (lstm): LSTM(300, 128, num_layers=2, batch_first=True, bidirectional=True)
  (fc): Linear(in_features=256, out_features=10, bias=True)
)
```

```python
test_tensor = torch.randint(high=200, size=(16, 32))
lstm_model(test_tensor).shape
```

```
torch.Size([16, 10])
```
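All three models share the same 4762 × 300 embedding, which holds 1,428,600 weights. To compare their sizes, you can count the remaining trainable parameters from the layer shapes. The sketch assumes PyTorch's standard layouts:

- A `Linear(in, out)` layer has `in*out + out` parameters.
- A `Conv2d(1, n, (fs, d))` layer has `n*fs*d + n` parameters.
- Each LSTM layer and direction has `4*H*(input + H)` weights plus two bias vectors of size `4*H`.

The helper names are hypothetical.

```python
def linear_params(n_in, n_out):
    return n_in * n_out + n_out  # weight matrix + bias


def textcnn_params(num_filters, filter_sizes, embedding_dim, output_dim):
    convs = sum(num_filters * fs * embedding_dim + num_filters for fs in filter_sizes)
    return convs + linear_params(len(filter_sizes) * num_filters, output_dim)


def lstm_params(input_dim, hidden_dim, num_layers, bidirectional, output_dim):
    directions = 2 if bidirectional else 1
    total = 0
    layer_input = input_dim
    for _ in range(num_layers):
        # weight_ih (4H x in), weight_hh (4H x H), bias_ih (4H), bias_hh (4H), per direction
        per_direction = 4 * hidden_dim * (layer_input + hidden_dim) + 2 * 4 * hidden_dim
        total += directions * per_direction
        layer_input = directions * hidden_dim  # the next layer sees both directions
    return total + linear_params(directions * hidden_dim, output_dim)


print('FastText:', linear_params(300, 10))                     # 3010
print('TextCNN :', textcnn_params(2, [5, 6, 7], 300, 10))      # 10876
print('LSTM    :', lstm_params(300, 128, 2, True, 10))         # 838154
```

You can check these numbers against `sum(p.numel() for p in model.parameters() if p.requires_grad)`. Because `freeze=False` keeps the embedding trainable, that total should exceed each figure above by exactly 1,428,600.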

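Initializing Model Weights

Re-initialize every parameter except the pretrained embeddings. Weights use Xavier normal initialization by default, and biases are set to zero.

```python
def init_network(model, method='xavier', exclude='embedding', seed=123):
    torch.manual_seed(seed)  # make the random initialization reproducible
    for name, w in model.named_parameters():
        if exclude in name:
            continue  # keep the pretrained embedding weights untouched
        if 'weight' in name:
            if method == 'xavier':
                nn.init.xavier_normal_(w)
            elif method == 'kaiming':
                nn.init.kaiming_normal_(w)
            else:
                nn.init.normal_(w)
        elif 'bias' in name:
            nn.init.constant_(w, 0)
```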

```python
init_network(fastText_model)
init_network(textCNN_model)
init_network(lstm_model)
```
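Here is a worked example of what the two main options do. Xavier normal draws weights from N(0, σ²) with σ = sqrt(2 / (fan_in + fan_out)). Kaiming normal, with PyTorch's defaults (`a=0`, `mode='fan_in'`, `nonlinearity='leaky_relu'`), uses σ = sqrt(2 / fan_in). For a `Linear` weight, fan_in and fan_out are its input and output sizes. For a `Conv2d` weight, both are multiplied by the kernel area. The sketch below computes σ for two layers defined above.

```python
import math


def xavier_normal_std(fan_in, fan_out, gain=1.0):
    return gain * math.sqrt(2.0 / (fan_in + fan_out))


def kaiming_normal_std(fan_in):
    # Gain for leaky_relu with negative slope a=0 is sqrt(2)
    return math.sqrt(2.0) / math.sqrt(fan_in)


# FastText fc: Linear(300, 10)
print(round(xavier_normal_std(300, 10), 4))
# TextCNN conv with fs=5: in_channels=1, out_channels=2, kernel 5 x 300
kernel_area = 5 * 300
print(round(xavier_normal_std(1 * kernel_area, 2 * kernel_area), 4))
# The same Linear(300, 10) under method='kaiming'
print(round(kaiming_normal_std(300), 4))
```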

Defining a Training Logger Class

```python
import time


class PrintLog:
    """Append messages to a log file and optionally echo them to the console."""

    def __init__(self, log_filename):
        self.log_filename = log_filename

    def print_(self, message, only_file=False, add_time=True, cost_time=None):
        if add_time:
            current_time = time.strftime("%Y-%m-%d %H-%M-%S", time.localtime())
            message = f"Time {current_time} : {message}"
        if cost_time:
            message = f"{message} Cost Time: {cost_time}s"
        with open(self.log_filename, 'a', encoding='utf-8') as f:
            f.write(message + '\n')
        if not only_file:
            print(message)
```
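Python's standard `logging` module can produce a similar file-plus-console output. The sketch below swaps that module in for the hand-written class. The logger name, the file name `example_training.log`, and the format string are assumptions, chosen to mimic the lines that `PrintLog` writes.

```python
import logging
import sys


def make_logger(log_filename, name='training'):
    """Logger that writes timestamped lines to log_filename and to stdout."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()   # avoid duplicate handlers when re-run in a notebook
    logger.propagate = False  # do not also pass records to the root logger
    formatter = logging.Formatter('Time %(asctime)s : %(message)s',
                                  datefmt='%Y-%m-%d %H-%M-%S')
    for handler in (logging.FileHandler(log_filename, encoding='utf-8'),
                    logging.StreamHandler(sys.stdout)):
        handler.setFormatter(formatter)
        logger.addHandler(handler)
    return logger


logger = make_logger('example_training.log')
logger.info('Train epoch 1, Loss 2.2960')
```

To reproduce `only_file=True`, you could create a second logger that has only the `FileHandler`.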

Model Training

```python
import math
import time


def train(model, device, train_loader, criterion, optimizer, epoch, print_log):
    model.train()
    total = 0
    start_time = time.time()
    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = criterion(output, target)
        loss.backward()
        optimizer.step()
        total += len(data)
        # Text progress bar, 50 characters wide; reaches 100% on the last batch
        progress = math.ceil((batch_idx + 1) / len(train_loader) * 50)
        print("\rTrain epoch %d: %d/%d, [%-51s] %d%%" %
              (epoch, total, len(train_loader.dataset), '-' * progress + '>', progress * 2), end='')
    epoch_cost_time = time.time() - start_time
    print()
    # Logs the loss of the last batch of the epoch, not the epoch average
    print_log.print_(f"Train epoch {epoch}, Loss {loss.item()}", cost_time=epoch_cost_time)
```

```python
def test(model, device, test_loader, criterion, print_log):
    model.eval()
    test_loss = 0
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            # criterion returns the *mean* loss of the batch
            test_loss += criterion(output, target).item()
            pred = output.argmax(dim=1, keepdim=True)
            correct += pred.eq(target.view_as(pred)).sum().item()
    # Sum of per-batch means / number of samples = mean loss * (num batches / num samples),
    # roughly mean / batch_size; this is why the logs below show values such as 0.0015
    test_loss /= len(test_loader.dataset)
    print_log.print_('Test: average loss: {:.4f}, accuracy: {}/{} ({:.0f}%)'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))
    return test_loss
```
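`nn.CrossEntropyLoss` averages within each batch by default, so `test` adds up per-batch means and then divides by the number of samples. FastText evaluates 10,000 test samples in 10 batches of up to 1024, so the logged value is about the per-sample loss divided by 1000. Its first-epoch 0.0023 therefore corresponds to a loss of about 2.29. The LSTM uses 40 batches of up to 256, which gives a factor of about 1/250, so its 0.0092 also corresponds to about 2.30. As a result, the logged losses are not comparable across models trained with different batch sizes.

The sketch below contrasts the logged quantity with a sample-weighted average. The batch losses are made up. In PyTorch, the fix would be to accumulate `criterion(output, target).item() * len(data)`.

```python
def reported_loss(batch_means, n_samples):
    """What test() logs: sum of per-batch mean losses divided by the number of samples."""
    return sum(batch_means) / n_samples


def sample_weighted_loss(batch_means, batch_sizes):
    """True per-sample mean: weight each batch mean by its batch size."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# 10,000 test samples with batch_size=1024 -> 9 full batches + one batch of 784
batch_sizes = [1024] * 9 + [784]
batch_means = [2.3] * 10  # made-up: every batch has mean loss 2.3
print(len(batch_sizes), sum(batch_sizes))
print(reported_loss(batch_means, sum(batch_sizes)))    # about 0.0023
print(sample_weighted_loss(batch_means, batch_sizes))  # about 2.3
```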

```python
def run_train(model, epochs, train_loader, test_loader, device, resume="", model_name=""):
    log_filename = f"{model_name}_training.log"
    print_log = PrintLog(log_filename)
    model.to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)
    criterion = nn.CrossEntropyLoss()
    min_loss = torch.inf
    start_epoch = 0
    delta = 1e-4  # relative tolerance: smaller drops do not count as an improvement
    if resume:
        print_log.print_(f'loading from {resume}')
        checkpoint = torch.load(resume, map_location=device)
        model.load_state_dict(checkpoint['model_state_dict'])
        optimizer.load_state_dict(checkpoint['optimizer_state_dict'])
        start_epoch = checkpoint['epoch']
        min_loss = checkpoint['loss']
    for epoch in range(start_epoch + 1, start_epoch + epochs + 1):
        train(model, device, train_loader, criterion, optimizer, epoch, print_log)
        # Note: the test set doubles as the model-selection set here
        loss = test(model, device, test_loader, criterion, print_log)
        # Save a checkpoint only when the loss drops by more than the tolerance
        if loss < min_loss and not torch.isclose(torch.tensor([min_loss]), torch.tensor([loss]), delta):
            print_log.print_(f'Loss Reduce {min_loss} to {loss}')
            min_loss = loss
            save_file = f'{model_name}_checkpoint_best.pt'
            torch.save({
                'epoch': epoch,  # last completed epoch; resuming continues at epoch + 1
                'model_state_dict': model.state_dict(),
                'optimizer_state_dict': optimizer.state_dict(),
                'loss': loss
            }, save_file)
            print_log.print_(f'Save checkpoint to {save_file}')
        print('----------------------------------------')
```
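The improvement test `loss < min_loss and not torch.isclose(..., delta)` passes `delta` as the third positional argument of `torch.isclose`, which is `rtol`. That function treats two values as close when `|a - b| <= atol + rtol * |b|`, with a default `atol` of 1e-8. A checkpoint is therefore written only when the loss drops by more than roughly 0.01%. The stdlib sketch below replays that rule on a made-up loss sequence. The helpers `isclose_like_torch` and `checkpoint_epochs` are hypothetical names.

```python
def isclose_like_torch(a, b, rtol, atol=1e-8):
    """Mirror of torch.isclose's rule: |a - b| <= atol + rtol * |b| (exact equality also counts)."""
    return a == b or abs(a - b) <= atol + rtol * abs(b)


def checkpoint_epochs(losses, delta=1e-4, start_epoch=0):
    """Return the epochs at which run_train would save a new best checkpoint."""
    min_loss = float('inf')
    saved = []
    for epoch, loss in enumerate(losses, start=start_epoch + 1):
        if loss < min_loss and not isclose_like_torch(min_loss, loss, delta):
            min_loss = loss
            saved.append(epoch)
    return saved


losses = [0.0023, 0.0020, 0.0019999, 0.0018, 0.0019]  # made-up values
print(checkpoint_epochs(losses))  # [1, 2, 4]: epoch 3's drop is within tolerance
```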

Training the FastText Model

```python
torch.cuda.empty_cache()  # release cached GPU memory left over from earlier runs

epochs = 100
batch_size = 1024
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)  # evaluation needs no shuffling

run_train(fastText_model, epochs, train_loader, test_loader, device, model_name='FastText')
```

```
Train epoch 1: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 14-56-51 : Train epoch 1, Loss 2.2960190773010254 Cost Time: 7.86016058921814s
Time 2023-10-30 14-56-51 : Test: average loss: 0.0023, accuracy: 1012/10000 (10%)
Time 2023-10-30 14-56-51 : Loss Reduce inf to 0.0022928255558013915
Time 2023-10-30 14-56-51 : Save checkpoint to FastText_checkpoint_best.pt
......
Train epoch 99: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 14-59-24 : Train epoch 99, Loss 1.4620513916015625 Cost Time: 1.5067195892333984s
Time 2023-10-30 14-59-24 : Test: average loss: 0.0015, accuracy: 6437/10000 (64%)
Time 2023-10-30 14-59-24 : Loss Reduce 0.0015308248996734619 to 0.0015263622641563416
Time 2023-10-30 14-59-24 : Save checkpoint to FastText_checkpoint_best.pt
----------------------------------------
Train epoch 100: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 14-59-25 : Train epoch 100, Loss 1.5207431316375732 Cost Time: 1.591019868850708s
Time 2023-10-30 14-59-26 : Test: average loss: 0.0015, accuracy: 6434/10000 (64%)
Time 2023-10-30 14-59-26 : Loss Reduce 0.0015263622641563416 to 0.0015218000650405884
Time 2023-10-30 14-59-26 : Save checkpoint to FastText_checkpoint_best.pt
----------------------------------------
```

Training the TextCNN Model

```python
torch.cuda.empty_cache()

epochs = 100
batch_size = 1024
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

run_train(textCNN_model, epochs, train_loader, test_loader, device, model_name='TextCNN')
```

```
Train epoch 1: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 14-59-52 : Train epoch 1, Loss 2.2562575340270996 Cost Time: 21.60412096977234s
Time 2023-10-30 14-59-53 : Test: average loss: 0.0022, accuracy: 2353/10000 (24%)
Time 2023-10-30 14-59-53 : Loss Reduce inf to 0.0022334169387817383
Time 2023-10-30 14-59-53 : Save checkpoint to TextCNN_checkpoint_best.pt
......
Train epoch 99: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 15-30-18 : Train epoch 99, Loss 1.6620413064956665 Cost Time: 17.734992504119873s
Time 2023-10-30 15-30-19 : Test: average loss: 0.0013, accuracy: 7021/10000 (70%)
Time 2023-10-30 15-30-19 : Loss Reduce 0.0012574271082878112 to 0.0012544285655021667
Time 2023-10-30 15-30-19 : Save checkpoint to TextCNN_checkpoint_best.pt
----------------------------------------
Train epoch 100: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 15-30-37 : Train epoch 100, Loss 1.6703954935073853 Cost Time: 17.817910194396973s
Time 2023-10-30 15-30-38 : Test: average loss: 0.0013, accuracy: 7042/10000 (70%)
----------------------------------------
```

Training the LSTM Model

```python
torch.cuda.empty_cache()

epochs = 100
batch_size = 256  # smaller batches than for FastText and TextCNN
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

run_train(lstm_model, epochs, train_loader, test_loader, device, model_name='LSTM')
```

```
Train epoch 1: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 18-17-10 : Train epoch 1, Loss 2.3043763637542725 Cost Time: 13.364792108535767s
Time 2023-10-30 18-17-10 : Test: average loss: 0.0092, accuracy: 1006/10000 (10%)
Time 2023-10-30 18-17-10 : Loss Reduce inf to 0.00920427725315094
Time 2023-10-30 18-17-10 : Save checkpoint to LSTM_checkpoint_best.pt
......
Train epoch 99: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 18-38-50 : Train epoch 99, Loss 0.4723755419254303 Cost Time: 13.12613320350647s
Time 2023-10-30 18-38-50 : Test: average loss: 0.0014, accuracy: 8833/10000 (88%)
----------------------------------------
Train epoch 100: 180000/180000, [-------------------------------------------------->] 100%
Time 2023-10-30 18-39-03 : Train epoch 100, Loss 0.30108553171157837 Cost Time: 13.052341938018799s
Time 2023-10-30 18-39-04 : Test: average loss: 0.0014, accuracy: 8922/10000 (89%)
----------------------------------------
```

Model Validation

Rebuild the LSTM model with the same hyperparameters, then load the best checkpoint:

```python
embedding_pretrained = torch.Tensor(embedding_weight)
hidden_dim = 128
output_dim = 10

lstm_model = LSTMModel(embedding_pretrained, hidden_dim, output_dim)
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
```

```python
resume = 'LSTM_checkpoint_best.pt'
if resume:
    print(f'loading from {resume}')
    checkpoint = torch.load(resume, map_location=device)
    lstm_model.load_state_dict(checkpoint['model_state_dict'])
```

```
loading from LSTM_checkpoint_best.pt
```

```python
val_loader = DataLoader(val_dataset, batch_size=5, shuffle=True)

# Take a single batch of five validation samples without materializing the whole loader
data, target = next(iter(val_loader))
```

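```python
lstm_model.to(device)
lstm_model.eval()  # inference mode
with torch.no_grad():
    pred = lstm_model(data.to(device)).argmax(dim=1)
print(pred)    # predicted class IDs
print(target)  # ground-truth class IDs
```

```
tensor([4, 0, 2, 0, 4], device='cuda:0')
tensor([4, 0, 2, 0, 4])
```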

```python
# Reverse mapping ID -> character, built once instead of on every call
idx2vocab = {idx: word for word, idx in vocab.items()}


def idx2word(idx_list, idx2vocab=idx2vocab):
    return [idx2vocab.get(idx) for idx in idx_list]
```

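```python
PAD = '<PAD>'
# Decode each ID sequence back into text and strip the padding tokens
[''.join(idx2word(idx.tolist())).replace(PAD, '') for idx in data]
```

```
['功能最齐全入门单反 佳能450D套装4799',
 '分级基金配对转换今开通',
 '亨氏第一财季盈利超预期增长13%',
 '证监会就分级基金产品审核指引征求意见',
 '知识产权第一案追踪谁开发布道者软件']
```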

```python
# Map the predicted class IDs to their names
labels[pred.cpu().numpy()]
```

```
array(['science', 'finance', 'stocks', 'finance', 'science'], dtype='<U13')
```
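Checking five samples by eye is only a spot check. To evaluate the whole validation set, you could collect predicted and true class IDs over every batch, for example with `pred.tolist()` and `target.tolist()`, and summarize them per class. The stdlib sketch below uses made-up IDs with the ten class names from `class.txt`. The helper `per_class_accuracy` is hypothetical.

```python
from collections import Counter

LABELS = ['finance', 'realty', 'stocks', 'education', 'science',
          'society', 'politics', 'sports', 'game', 'entertainment']


def per_class_accuracy(preds, targets, labels=LABELS):
    """Accuracy (recall) per true class; classes absent from targets are skipped."""
    totals = Counter(targets)
    hits = Counter(t for p, t in zip(preds, targets) if p == t)
    return {labels[c]: hits[c] / totals[c] for c in sorted(totals)}


preds = [4, 0, 2, 0, 4, 2]    # made-up predicted IDs
targets = [4, 0, 2, 0, 4, 0]  # made-up true IDs
print(per_class_accuracy(preds, targets))
```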